Higher-Performance Migrations to Google Cloud VMware Engine

Migrating workloads from on-premises to the cloud over unpredictable, unreliable internet connectivity can be a major headache. A new whitepaper quantifies the difference private connectivity can make.

Cloud adoption keeps increasing, with enterprises "lifting and shifting" applications from on-premises infrastructure into their clouds of choice. Migrating workloads means transferring large amounts of data, sometimes petabytes, and can lead to numerous headaches: rising costs, many person-hours spent keeping a migration running smoothly, and the risk of outages in mission-critical business applications.

SPJ Solutions, one of VMware's top consulting partners in NSX*, has authored a whitepaper summarizing a study that used an on-premises lab environment to analyze the performance of migrating VMware virtual machines from on-premises to Google Cloud Platform (GCP). SPJ Solutions measured how fast such migrations could be performed using Megaport for private connectivity together with GCP's suite of networking tools.

*NSX is VMware's network virtualization and security platform, which enables software-defined cloud networking across data centers, clouds, and application frameworks.

Read the SPJ Solutions whitepaper on Google Cloud VMware Engine and Megaport now

End-to-end private connectivity with Megaport

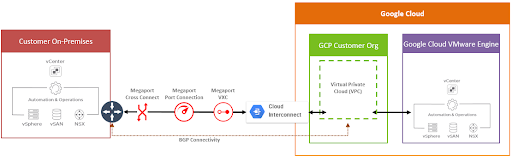

The lab environment was built with end-to-end connectivity from an on-premises environment to Google Cloud VMware Engine (GCVE) using a 10 Gbps Megaport Port, a Virtual Cross Connect, and Google Cloud Interconnect. SPJ Solutions used the Megaport portal to provision the Layer 3 connectivity to GCP for the migration.

The test plan was designed to mimic a real-world scenario, using Windows, one of the most widely used operating systems (OS) in IT, and Ubuntu to represent Linux. TinyCore Linux virtual machines were also used to test end-to-end connectivity and migration volume.

Migration test scenarios

SPJ Solutions ran several test scenarios migrating virtual machines between on-premises infrastructure and Google Cloud Platform, in both directions.

- Cold migration using HCX Bulk, which might be useful for customers with less business-critical applications that can be shut down during a migration. HCX Bulk allows users to schedule a group of machines to be migrated at a certain time and date.

- Live (hot) migration using HCX vMotion and vMotion, which avoids the problems caused by shutting down virtual machines but lengthens migration time.

- On-prem to cloud: the most common customer scenario.

- Cloud to on-prem: a relatively uncommon scenario.

Read how Flexify.io used Megaport to lower migration costs for customers by as much as 80%.

Benchmarking connectivity

Before running the test scenarios, SPJ Solutions performed end-to-end connectivity tests to identify potential bottlenecks in the following segments:

- Between physical servers and edge devices

- Between edge devices and the physical Megaport Port

- Between the Megaport network and Google Cloud Platform

Precautions were also taken to ensure that test results were not affected by external factors such as bandwidth utilization and virtual machine storage location.
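Because traffic crosses these segments in series, end-to-end throughput can be no higher than that of the slowest segment, so benchmarking each one separately shows where a bottleneck would sit. The sketch below illustrates that reasoning only. The function name `find_bottleneck` is hypothetical, and the per-segment figures are placeholder values, not measurements from the whitepaper.

```python
from typing import Dict, Tuple


def find_bottleneck(segment_mbps: Dict[str, float]) -> Tuple[str, float]:
    """Return the path segment with the lowest measured throughput.

    For segments traversed in series, end-to-end throughput is capped by
    the slowest segment, so that segment is the bottleneck.
    """
    if not segment_mbps:
        raise ValueError("at least one segment measurement is required")
    name = min(segment_mbps, key=segment_mbps.get)
    return name, segment_mbps[name]


# Hypothetical per-segment measurements in Mbps (placeholders only,
# NOT results reported by SPJ Solutions).
measurements = {
    "physical servers -> edge devices": 9100.0,
    "edge devices -> Megaport Port": 9600.0,
    "Megaport network -> Google Cloud Platform": 9400.0,
}

segment, mbps = find_bottleneck(measurements)
print(f"Bottleneck: {segment} ({mbps:.0f} Mbps)")
```

With these placeholder inputs, the server-to-edge segment would be flagged as the bottleneck; real measurements would replace the dictionary values.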

Results

The following table shows the results of migrating batches of 50 virtual machines (50 of each type in mixed-OS tests) from on-prem to GCP and from GCP to on-prem. Throughput was highest for HCX Bulk migrations, and migration times were broadly consistent between repeated runs of each test because of reliable, end-to-end private connectivity from Megaport.

| Test # | Migration Method | Direction | VM Type | # VMs | Run 1 Time (hh:mm) | Run 2 Time (hh:mm) | Average Throughput, vCenter (Mbps) |
|---|---|---|---|---|---|---|---|
| 1 | HCX Bulk | On-prem -> GCP | Windows VM / TinyCore VM | 50 / 50 | 2:06* | 2:12* | 2183 |
| 2 | HCX Bulk | GCP -> On-prem | Windows VM / TinyCore VM | 50 / 50 | 2:13* | 2:08* | 2196 |
| 3 | HCX Bulk | On-prem -> GCP | Ubuntu VM | 50 | 0:57* | 0:59* | 1859 |
| 4 | HCX Bulk | GCP -> On-prem | Ubuntu VM | 50 | 0:53* | 0:52* | 1585 |
| 5 | HCX vMotion | On-prem -> GCP | Windows VM | 50 | 6:18 | 7:09 | 253 |
| 6 | HCX vMotion | GCP -> On-prem | Windows VM | 50 | 10:29 | 9:44 | 260 |
| 7 | HCX vMotion | On-prem -> GCP | Windows VM / TinyCore VM | 50 / 50 | 10:49 | 11:30 | 248 |
| 8 | HCX vMotion | GCP -> On-prem | Windows VM / TinyCore VM | 50 / 50 | 14:27 | N/A | |
| 9 | HCX vMotion | On-prem -> GCP | Ubuntu VM | 50 | 7:21 | 6:16 | 147 |
| 10 | HCX vMotion | GCP -> On-prem | Ubuntu VM | 50 | 5:26 | 5:32 | 128 |
| 11 | HCX vMotion | On-prem -> GCP | TinyCore VM | 50 | 0:14* | | 112 |
| 12 | HCX vMotion | On-prem -> GCP | Windows VM / Ubuntu VM | 50 / 50 | 11:53 | 11:09 | N/A |
| 13 | HCX vMotion | GCP -> On-prem | Windows VM / Ubuntu VM | 50 / 50 | 15:02 | 13:09 | 108 |
| 14 | vMotion | On-prem -> GCP | Windows VM | 50 | 1:49 | N/A | |
| 15 | vMotion | GCP -> On-prem | Windows VM | 50 | 1:48 | N/A | |
| 16 | vMotion | On-prem -> GCP | Windows VM / TinyCore VM | 50 / 50 | 2:02 | N/A | |
| 17 | vMotion | GCP -> On-prem | Windows VM / TinyCore VM | 50 / 50 | 1:50 | N/A | |
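To compare tests at a glance, the two run times in each row can be averaged. The helper functions below are a hypothetical sketch, assuming the Run 1 and Run 2 columns are hours:minutes as the headers state; they strip the table's asterisk markers and skip runs recorded as N/A or left blank.

```python
from typing import Optional


def to_minutes(value: str) -> Optional[int]:
    """Convert an 'h:mm' table cell to minutes; return None for N/A or blank."""
    cell = value.strip().rstrip("*")  # drop the table's asterisk marker
    if cell in ("", "N/A"):
        return None
    hours, minutes = cell.split(":")
    return int(hours) * 60 + int(minutes)


def average_run_minutes(*runs: str) -> Optional[float]:
    """Average the available runs for one test, in minutes."""
    values = [m for m in (to_minutes(r) for r in runs) if m is not None]
    if not values:
        return None
    return sum(values) / len(values)


def format_hhmm(total_minutes: float) -> str:
    """Format minutes as h:mm, rounded to the nearest minute."""
    rounded = round(total_minutes)
    return f"{rounded // 60}:{rounded % 60:02d}"


# Run times copied from the results table.
print(format_hhmm(average_run_minutes("2:06*", "2:12*")))  # Test 1 -> 2:09
print(format_hhmm(average_run_minutes("5:26", "5:32")))    # Test 10 -> 5:29
print(format_hhmm(average_run_minutes("1:49", "N/A")))     # Test 14 -> 1:49
```

Averaging only the runs that exist keeps single-run tests such as Test 14 comparable with the two-run tests.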

Conclusion

SPJ Solutions' migration tests between on-premises infrastructure and GCVE over Megaport connectivity show that this architecture can provide a fast, predictable cloud migration experience. As the results show, it adapts to a range of migration methods and directions, and can make migrations easier and faster in disaster recovery or "lift and shift" scenarios.